Confidential Multi-Stakeholder Workflows

We show how to operate a workflow consisting of multiple services (a.k.a. stages). Each service executes inside an enclave to protect its intellectual property (IP). The IP might encompass data and code stored in files. For example, the owner of a novel AI algorithm implemented in Python needs to protect the implementation both from clients that might run it without paying for each execution and from competitors that might want to copy the algorithm. A service might also need access to an expensive AI model, which must be protected against reverse engineering and unpaid use.

We assume that services are containerized, i.e., service instances are deployed as containers. Stakeholders might not only operate their own services but also deploy services owned by other stakeholders. For example, a stakeholder SH3 might operate a confidential workflow consisting only of services owned by other stakeholders, while the computed data might belong to SH3.

Problem Description

To simplify the problem description, we consider a straightforward workflow: two stakeholders, SH1 and SH2, provide services S1 and S2, respectively. S1 produces some output files, which are the input of S2. S2 processes these files and writes its own output files. All output files belong to a third stakeholder, SH3. SH3 deploys all services, and they run inside a cloud operated by a cloud provider CP.

Note that our approach also applies to more general confidential workflows:

- there might be more than three stakeholders involved,

- there might be a maximum number of times a service is permitted to be executed (e.g., because executions must be prepaid),

- SH3 might also own some services of the workflow,

- there might be more general workflow graphs beyond a simple pipeline, and

- services could communicate via TLS-protected connections instead of volumes.
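A workflow graph beyond a simple pipeline can be modeled as a directed acyclic graph in which each service lists the services whose output it reads. The following minimal sketch, using Python's standard graphlib module, computes an order in which the services can be started so that each one runs only after its inputs exist. The services S0 and S3 are hypothetical, and this models only the dependency structure; it is not how SCONE itself schedules workflows.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which the services can be started.

    `dependencies` maps each service to the services whose output it reads.
    Raises CycleError if the workflow graph contains a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())


# The simple pipeline from the problem description: S2 reads S1's output.
print(execution_order({"S2": {"S1"}}))  # prints ['S1', 'S2']

# A hypothetical, more general graph: S1 and S2 both read the output of S0,
# and S3 combines the outputs of S1 and S2.
print(execution_order({"S1": {"S0"}, "S2": {"S0"}, "S3": {"S1", "S2"}}))

# A cyclic "workflow" has no valid execution order and is rejected.
try:
    execution_order({"S1": {"S2"}, "S2": {"S1"}})
except CycleError:
    print("cycle detected")
```

In the second graph, S1 and S2 have no dependency on each other, so any order between them is valid and they could run in parallel.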

Objectives

Our main objective is to protect the IP of the three stakeholders SH1-SH3. The IP of a stakeholder must not be compromised, neither by any of the other stakeholders, nor by the cloud provider, nor by any other entity, even if that entity has root access to the host operating system or the Kubernetes nodes. The owner of the output files, SH3, must have access to them, but SH1 and SH2 must not be able to communicate with their services S1 and S2 while these process SH3's data. A stakeholder must still be able to limit the number of executions of its service. For example, SH3 might have paid for only three executions of S1, and hence, we need to enforce this limit.
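Conceptually, an execution limit is a counter that is checked and decremented before each run of a service. The following sketch models this idea with a hypothetical ExecutionBudget class. It is an illustration of the requirement only, not SCONE's enforcement mechanism; in a real deployment, the counter must be kept in a trusted place that the party deploying the service cannot reset or roll back.

```python
class ExecutionLimitExceeded(Exception):
    """Raised when a service has used up its permitted executions."""


class ExecutionBudget:
    """Hypothetical model of per-service execution limits.

    Services without an entry are not allowed to run at all.
    """

    def __init__(self, limits: dict[str, int]) -> None:
        self._remaining = dict(limits)

    def authorize(self, service: str) -> int:
        """Consume one execution of `service` and return how many remain."""
        remaining = self._remaining.get(service, 0)
        if remaining <= 0:
            raise ExecutionLimitExceeded(f"{service}: no executions left")
        self._remaining[service] = remaining - 1
        return remaining - 1


# SH3 has paid for three executions of S1 (the example from the text).
budget = ExecutionBudget({"S1": 3})
for run in range(1, 5):
    try:
        left = budget.authorize("S1")
        print(f"run {run}: allowed, {left} left")
    except ExecutionLimitExceeded as err:
        print(f"run {run}: refused ({err})")
```

The fourth run is refused because the three paid executions have been consumed.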

Approach

We use SCONE confidential computing to protect the IP of SH1, SH2, and SH3. To prevent SH1 and SH2 from accessing services S1 and S2, SH3 uses firewalls and sandboxing to ensure that S1 and S2 cannot communicate with the outside world. SCONE supports firewalling and sandboxing. In addition, SH3 might decide to execute S1 and S2 with standard sandboxing mechanisms.

Workflow Policy

Stakeholder SH1 protects service S1 with policy 1, which is under the control of SH1. In the same way, stakeholder SH2 protects service S2 with policy 2, which is under the control of SH2. Stakeholder SH3 connects the services with a third policy, which we call the workflow policy. Technically, this is just a SCONE policy that defines two encrypted volumes, vol 1 and vol 2, and grants policy 1 permission to access vol 1 and policy 2 permission to access both vol 1 and vol 2.
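The access rules of the workflow policy can be summarized as a mapping from policies to the volumes they may access. The following sketch encodes exactly the grants described above and checks them. It models only the intent of the workflow policy, not SCONE's policy syntax, and the identifiers policy-1, policy-2, policy-3, vol-1, and vol-2 are illustrative.

```python
# Grants of the workflow policy as described in the text:
# policy 1 (S1) may access vol 1; policy 2 (S2) may access vol 1 and vol 2.
WORKFLOW_GRANTS: dict[str, frozenset[str]] = {
    "policy-1": frozenset({"vol-1"}),
    "policy-2": frozenset({"vol-1", "vol-2"}),
}


def may_access(policy: str, volume: str) -> bool:
    """Return True if the workflow policy grants `policy` access to `volume`."""
    # Policies not named in the workflow policy get no access (default deny).
    return volume in WORKFLOW_GRANTS.get(policy, frozenset())


for policy in ("policy-1", "policy-2", "policy-3"):
    for volume in ("vol-1", "vol-2"):
        print(policy, volume, may_access(policy, volume))
```

In this sketch, the hypothetical policy-3 is denied access to both volumes because the workflow policy does not mention it.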

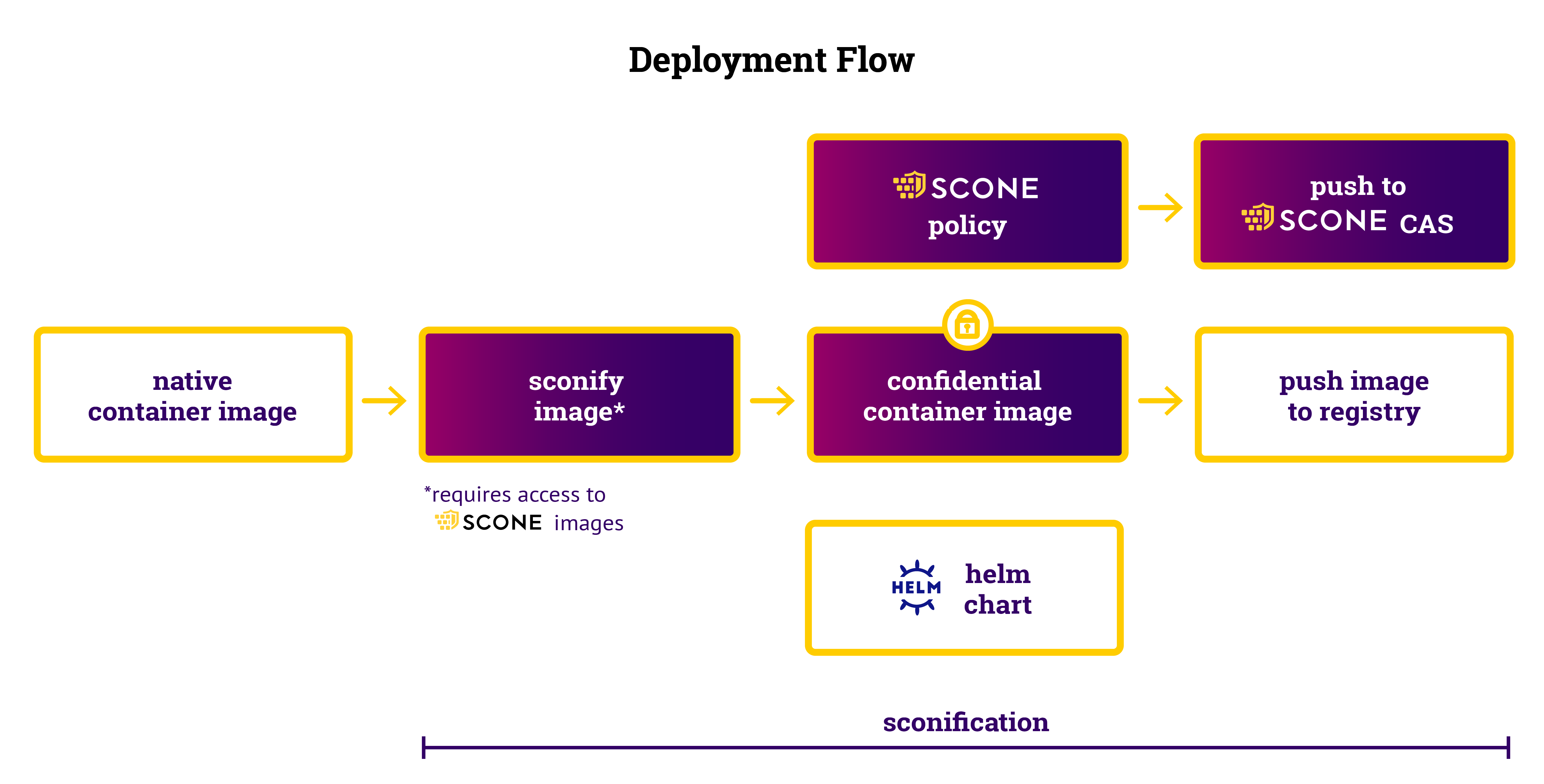

Services do not need to be modified to become part of a confidential workflow. One can use our standard sconify_image to transform native services into confidential services.

Prerequisites

To be able to use these workflows, we need to grant you access:

- please register a free account,

- send an email to info@scontain.com to get access to

  - sconify_image,

  - the code of this example, and

  - the GitHub repos containing the Helm charts to deploy SCONE LAS, SCONE CAS, and, optionally, the SCONE SGX Plugin,

- set up your credentials.

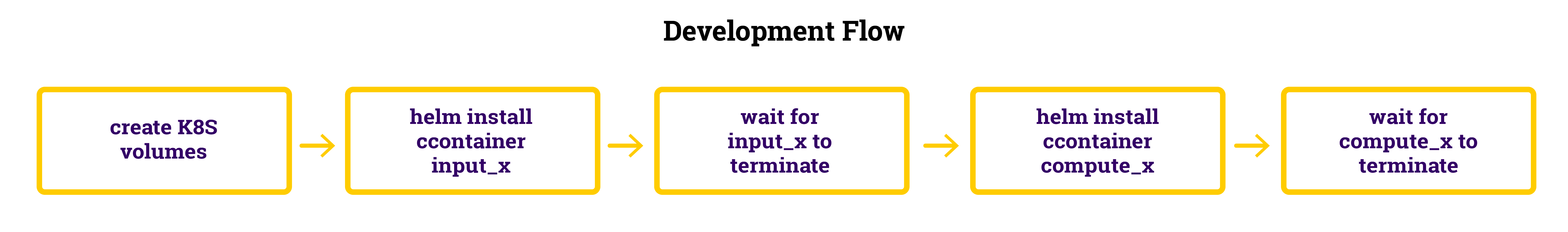

Development Flow

To run this example, you first need to clone the code:

```shell
git clone https://github.com/scontain/multi-stakeholder-workflow.git
cd multi-stakeholder-workflow
```

Assuming that you have already set up your credentials (see Prerequisites), you can create the confidential images by executing:

```shell
./sconify-compute.sh
```

Note that in practice, each stakeholder would sconify its images separately, because stakeholders want to give other stakeholders access only to the confidential images. For simplicity, we perform the sconification of all images in a single script.

Deployment Flow

One can deploy this workflow to a Kubernetes cluster by executing:

```shell
./deploy-compute.sh
```

The native Dockerfile passes the value 15 to the first container. This value is read from an encrypted volume and printed to the log. The tail of the output should therefore look like this:

```text
15
DONE!
```

As a next step, you could change the native Dockerfile (path containers/input-x/Dockerfile) to set a different value by passing the option -v VALUE to the Python script. For example, you could set the command in the Dockerfile to:

```dockerfile
CMD ["python3", "/code/input-x.py", "-v", "20"]
```

After running ./sconify-compute.sh again, the policy of the sconified input container specifies that option -v 20 is passed to the input container:

```yaml
services:
  - name: input-x-service
    image_name: input-x-service_image
    mrenclaves: [09ed4f279ffa98ab045bde1ffcc2ca75f4f00b68fb8bd0d3ddb5430d1dbd7490]
    command: "python3 /code/input-x.py -v 20"
    environment:
      SCONE_MODE: hw
      SCONE_LOG: "7"
      LANG: "C.UTF-8"
      PATH: "/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
    pwd: /
```

In this case, the output of the computation is 20 instead of 15.
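To illustrate how the changed command reaches the script, the following sketch splits the policy's command string into arguments, parses -v the way the script might, writes the value to a file on a volume, and lets a later step print it followed by DONE!. Everything beyond the command string and the -v option is an assumption: the real input-x.py is not shown here, and the file name value.txt, the default of 15, and the helper functions are hypothetical. A temporary directory stands in for the encrypted volume.

```python
import argparse
import shlex
import tempfile
from pathlib import Path


def parse_args(argv: list[str]) -> argparse.Namespace:
    """Parse the -v VALUE option (hypothetical reconstruction of input-x.py)."""
    parser = argparse.ArgumentParser(prog="input-x.py")
    # Assumption: the default 15 mirrors the value of the unmodified example.
    parser.add_argument("-v", type=int, default=15, help="value to pass on")
    return parser.parse_args(argv)


def write_value(volume: Path, value: int) -> None:
    """First stage: store the value in a file on the volume."""
    (volume / "value.txt").write_text(f"{value}\n")


def read_and_report(volume: Path) -> int:
    """Later stage: read the value back and print it, as seen in the log."""
    value = int((volume / "value.txt").read_text())
    print(value)
    print("DONE!")
    return value


# The command string from the sconified policy above.
command = "python3 /code/input-x.py -v 20"
argv = shlex.split(command)[2:]  # drop the interpreter and the script path

with tempfile.TemporaryDirectory() as tmp:
    volume = Path(tmp)  # stands in for the encrypted volume
    write_value(volume, parse_args(argv).v)
    read_and_report(volume)
```

The sketch uses shlex.split only because this command needs no special quoting; how SCONE itself tokenizes the command field is not covered here.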